Fulldome Media Environment

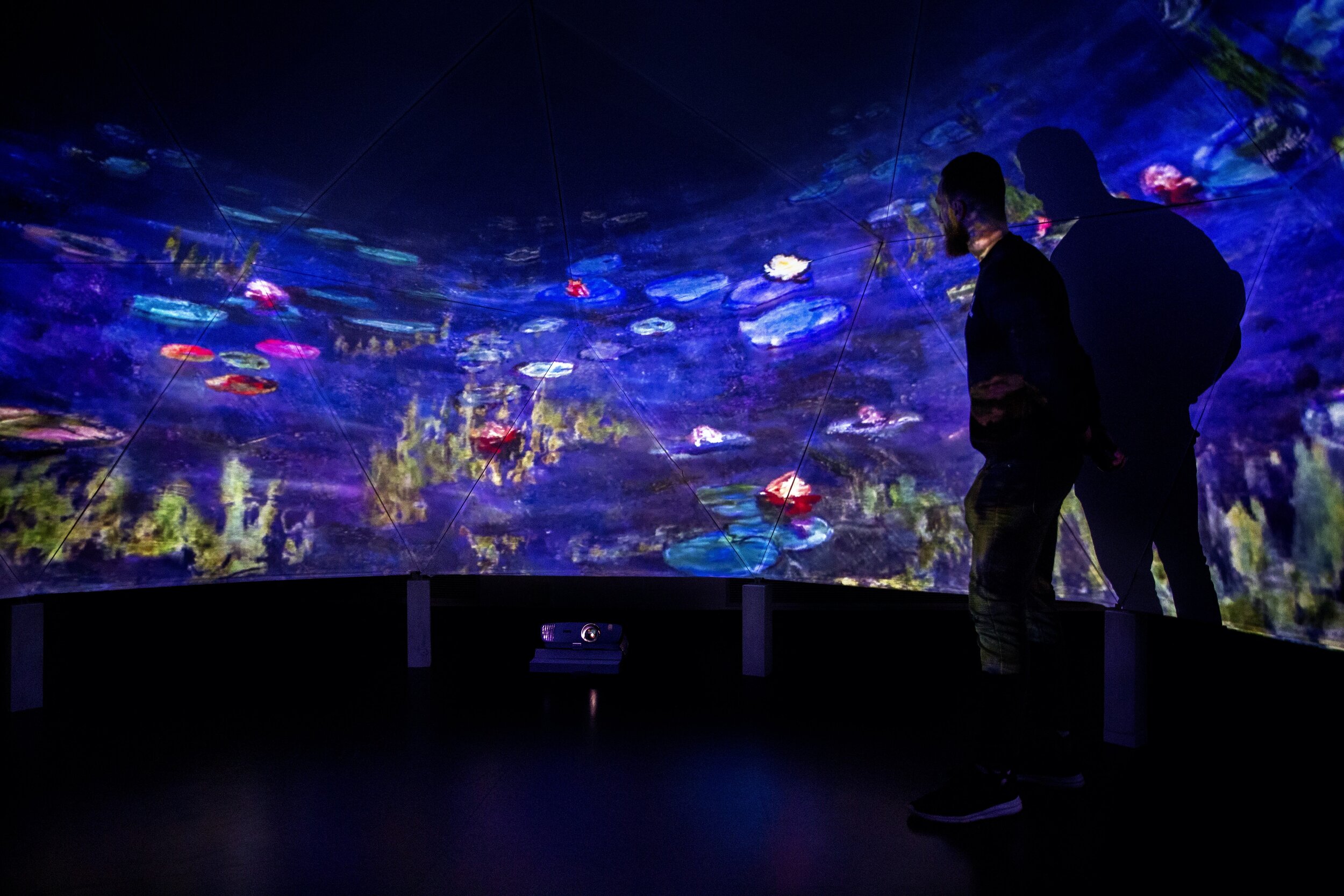

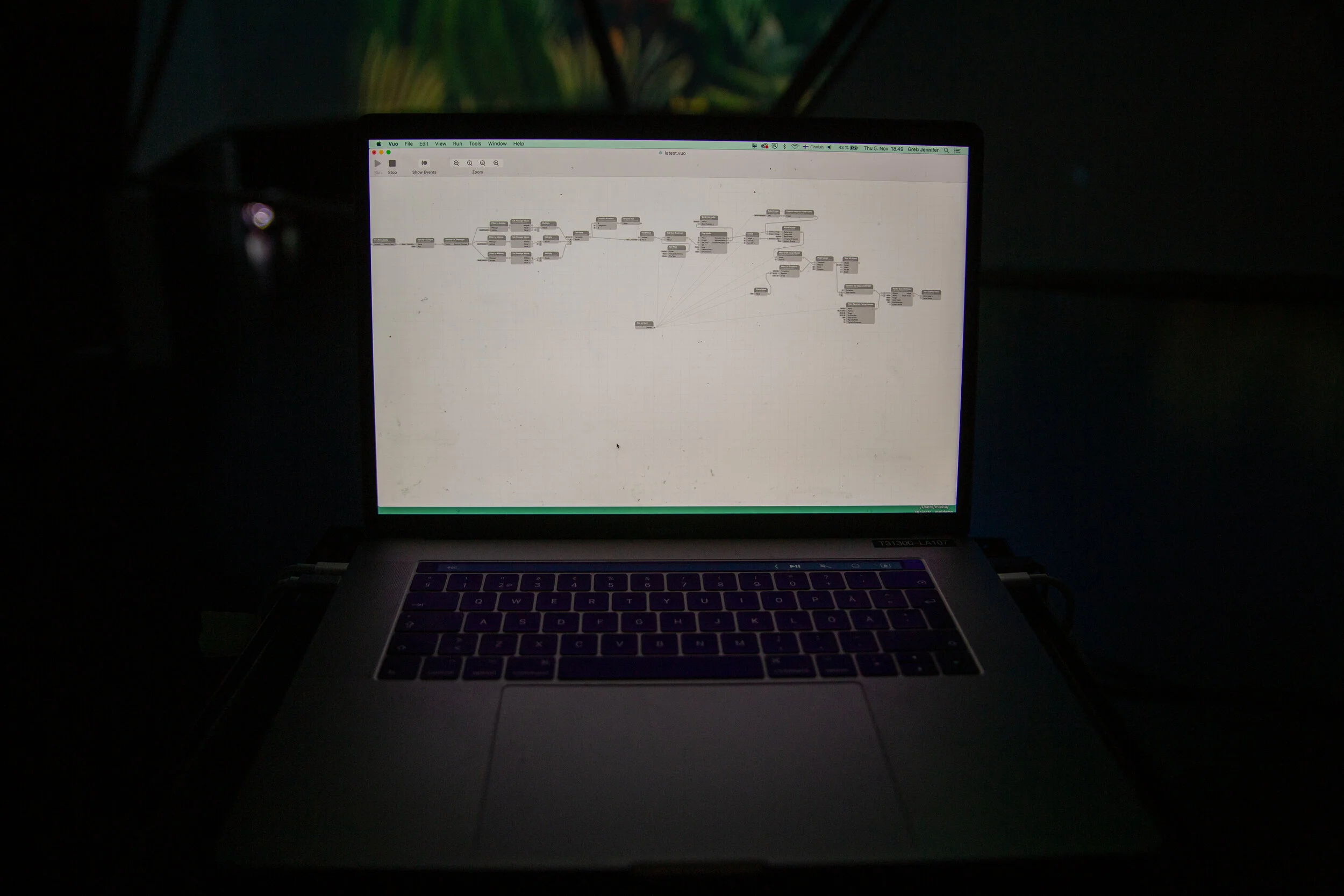

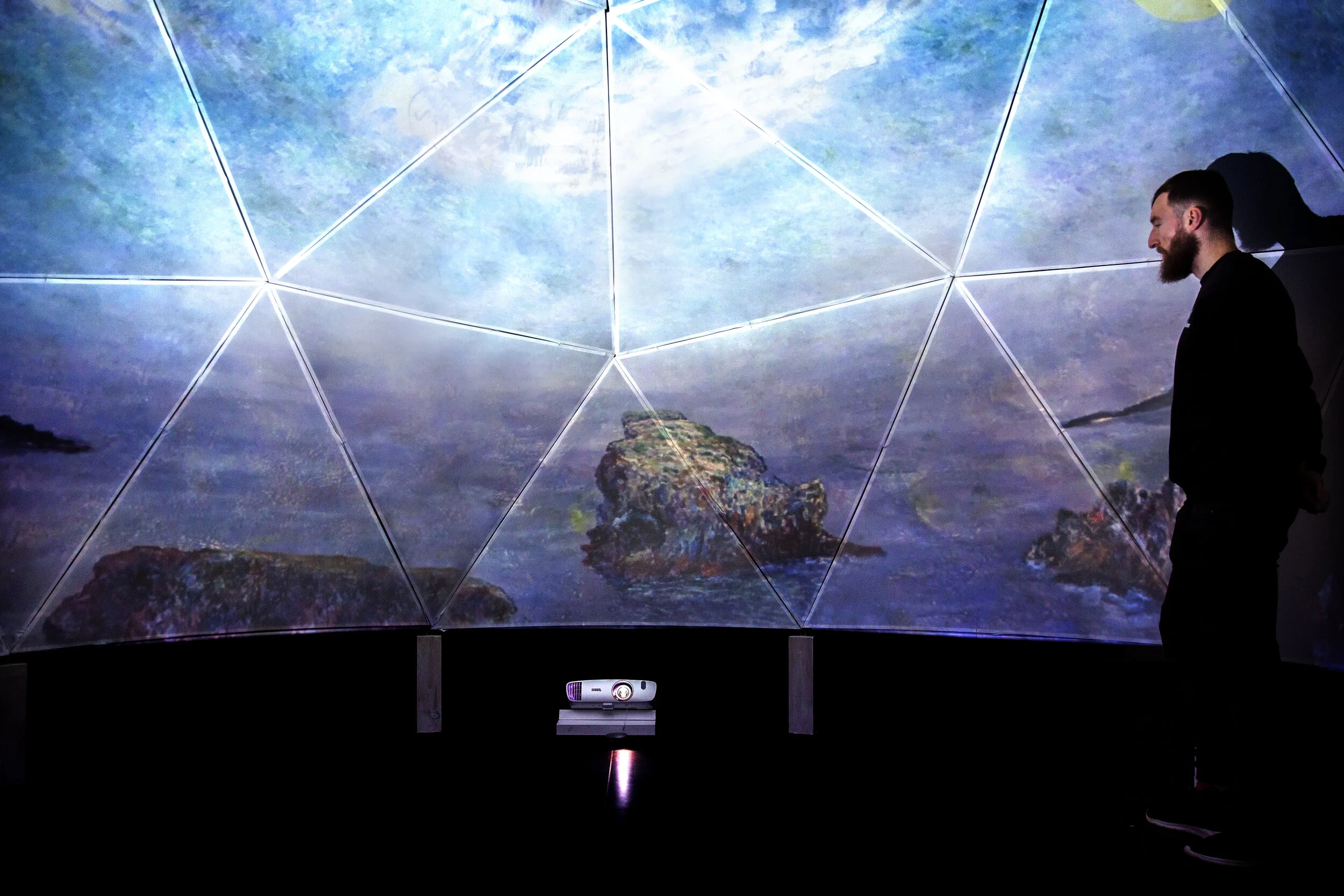

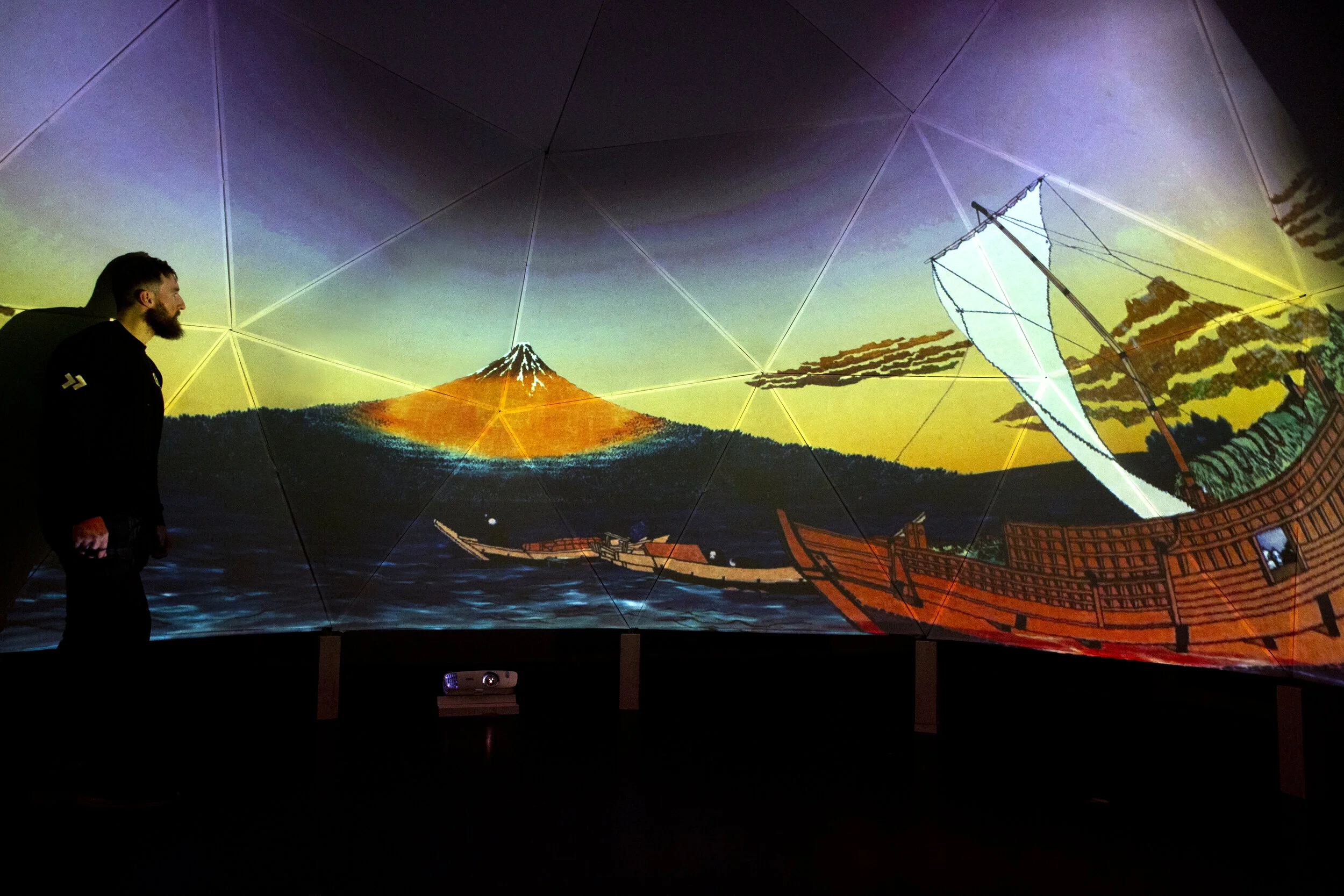

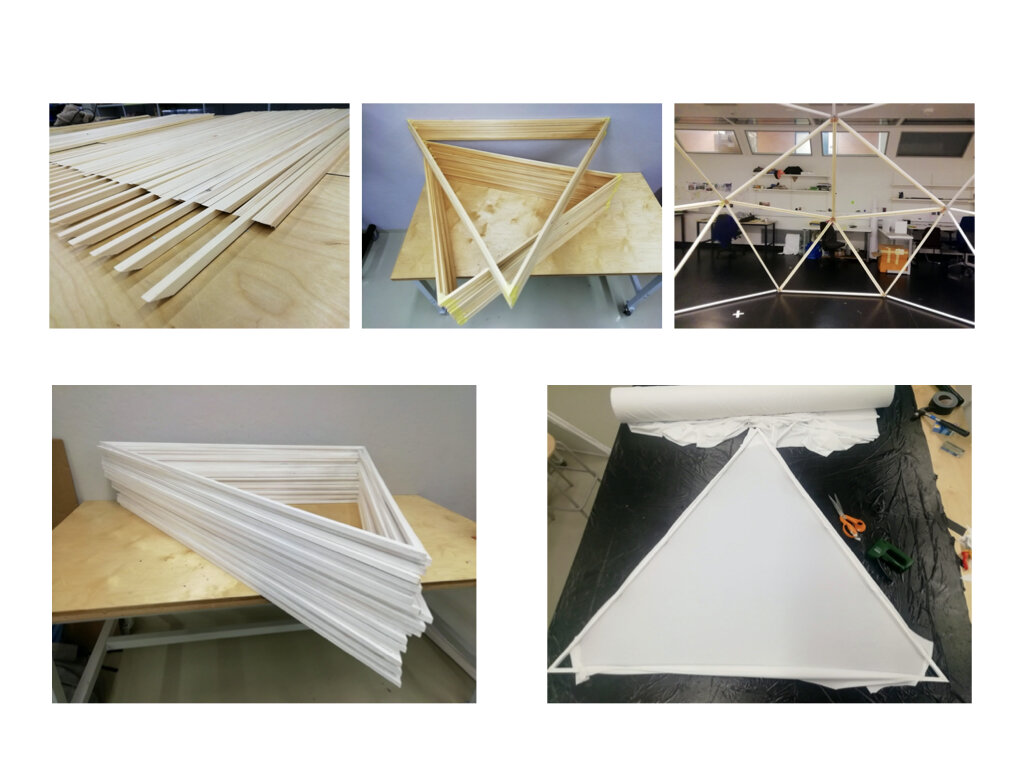

The video and gallery document the construction of a fulldome theatre, five meters in diameter and three meters tall, along with approximately nine minutes of animated media created for it. A user interface was also developed that allows visitors to control the playback of the animations inside the dome.

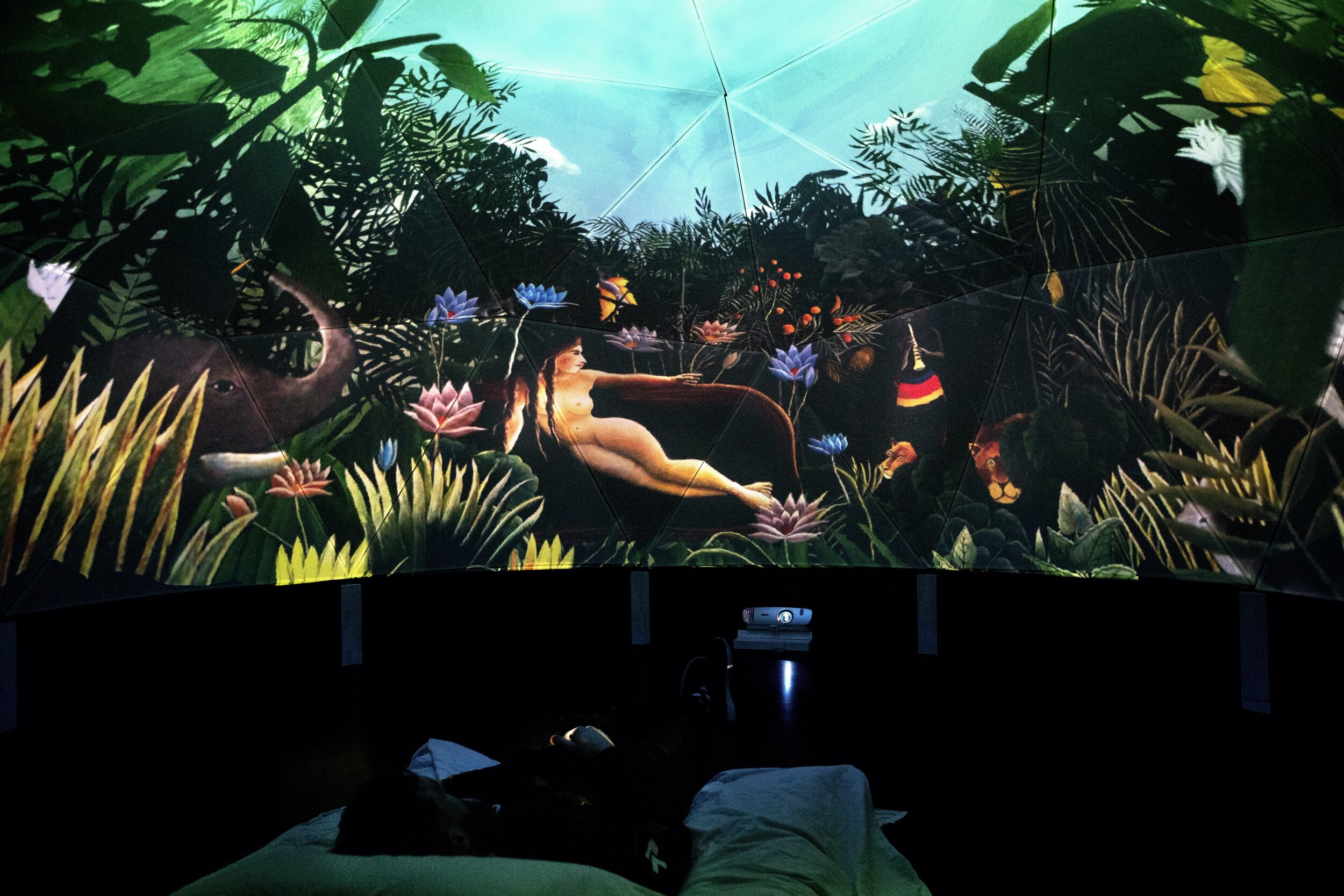

When visitors enter the dome, they are surrounded by three equidistant virtual paintings floating in the spherical theatre. The iconic selection of paintings includes “Water Lilies” by Claude Monet, “The Great Wave off Kanagawa” by Hokusai, and “The Dream” by Henri Rousseau. Using a cellphone application, visitors can select the paintings to play back one at a time. Once selected, the media dissolves into a panoramic tableau of an expansive scene, composited from multiple images from that artist’s collection of works. The frame of the painting, both visually and figuratively, recedes as imagery surrounds the audience. Each scene builds upon the original artwork by digitally synthesizing the image with other images from the artist’s body of work. These scenes are delicately animated, with various layers of the painting transforming dynamically in three-dimensional space. Each animated scene runs for approximately two to three minutes and is accompanied by audio. Once an animation has concluded, it dissolves to the opening scene, where the visitor can again select an animated sequence from one of the three artworks.

Featured below are three animations created for the fulldome environment, presented in virtual reality via YouTube VR. When viewing on a desktop screen, you can move your mouse to change the direction of the camera around the scene. When viewing on a cellphone, you can move the camera with your finger or by changing the direction of the phone itself. The audio for these animations was created by Sharif Jamaldin.

NEW MEDIA DEMOS

As a master's student in New Media Design and Production, I have studied a variety of production methods including virtual reality, 360-degree filmmaking, augmented reality, projected interfaces, and electronic sculptures. The short and intensive 3-week course structure embodies the MIT Media Lab’s motto, “Demo or Die.” These projects are the result of the rapid application of new technologies and programs, offering a glimpse into the overwhelming possibilities to be explored in these emerging mediums. The documentation of these demos aims to highlight my recent fields of inquiry and experimentation in the Media Lab at Aalto University.

LUCID DREAMING

Lucid Dreaming is a collaborative multimedia installation created by Jennifer Greb, Anna Puura and Julia Sand. The installation investigates the phenomenon of awakening into the subconscious.

When visitors step into the space, they encounter a bed and an immersive projection. An interactive floor and wall projection, created by Julia Sand, reacts to visitors' movements throughout the space. When visitors stand on the streaming particles, the particles move around the visitor and change color. Calming bell sounds softly ring when new motion is triggered. The projection moves around the bed, inviting those who are watching into a meditative contemplation.

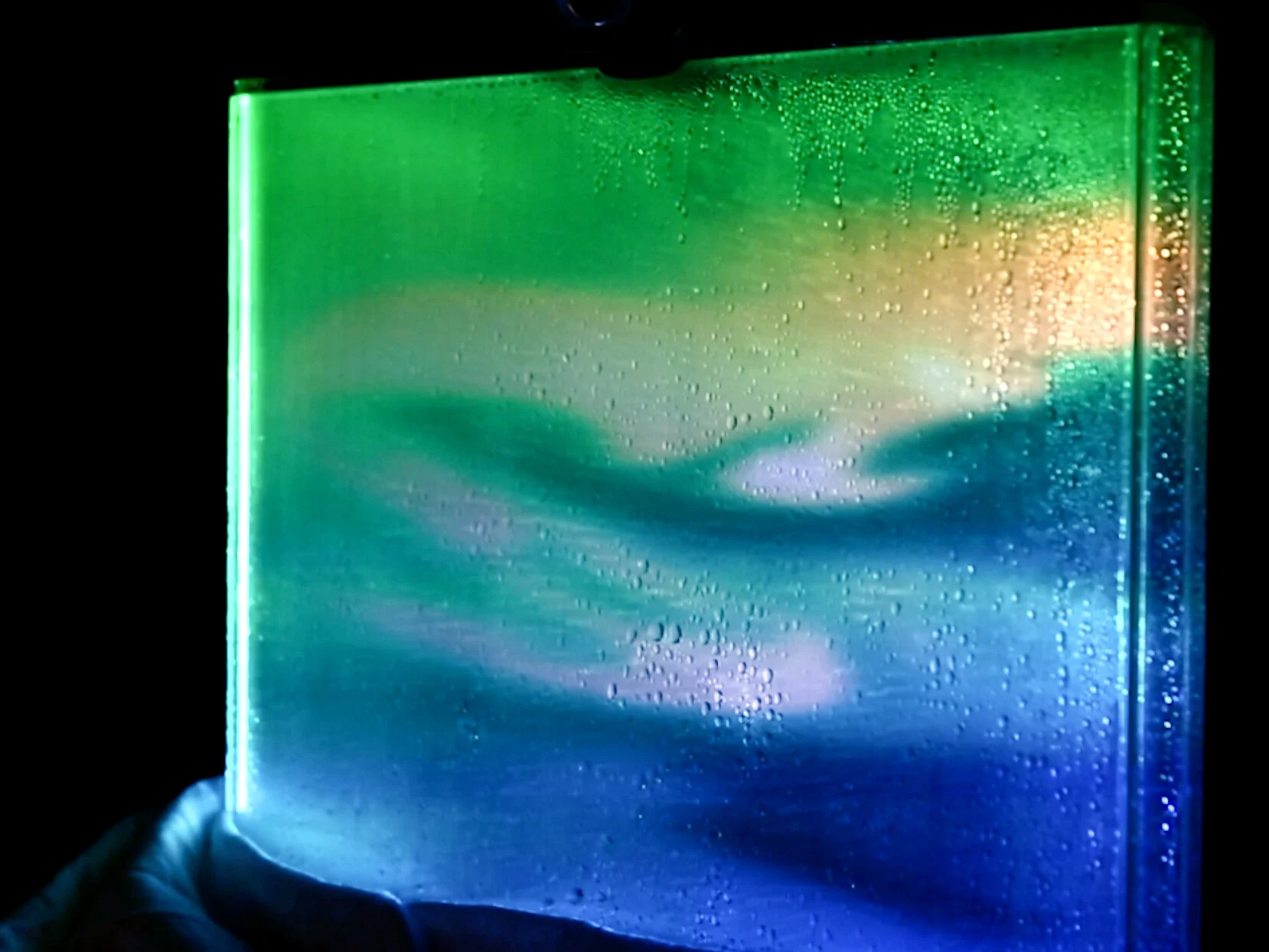

One at a time, visitors are invited to lie down on the bed and put on a Muse headband. This device reads the brain waves of the participant and transmits this data over Bluetooth and OSC to Processing. Processing compares the relative frequencies of Alpha and Beta brain waves and displays historic dream imagery in relation to the brain activity. These images are successively back-projected through a fabricated water vapor screen, which is parallel to, and directly above, the participant.
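The comparison of Alpha and Beta activity can be illustrated with a minimal Python sketch. This is not the Processing code used in the installation: the function names, the ordering of the image list, and the idea of mapping Alpha's share of the signal onto that list are all assumptions made for illustration.

```python
from typing import Sequence


def relaxation_ratio(alpha: float, beta: float) -> float:
    """Return Alpha's share of the combined Alpha + Beta power, in [0, 1]."""
    total = alpha + beta
    if total <= 0:
        return 0.5  # assumption: treat a missing signal as neutral
    return alpha / total


def pick_image(alpha: float, beta: float, images: Sequence[str]) -> str:
    """Pick an image from a list ordered from most alert to most relaxed."""
    ratio = relaxation_ratio(alpha, beta)
    # Scale the ratio to a list index and clamp it to the last entry.
    index = min(int(ratio * len(images)), len(images) - 1)
    return images[index]


# Hypothetical file names, ordered from "alert" to "relaxed".
dream_images = ["alert.png", "drifting.png", "calm.png", "deep.png"]
chosen = pick_image(alpha=0.8, beta=0.2, images=dream_images)
```

With a strongly Alpha-dominant reading, the sketch selects the last, most relaxed image; a Beta-dominant reading selects the first.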

As a result of this experience, crafted by Jennifer Greb and Anna Puura, visitors witness a visualization of their brain waves in real time. Lying in the dark with a cool mist overhead, the user becomes calm and lulled into a relaxed state, reminiscent of dreaming. During this state, surreal images are suspended in an overhead mist, providing an ethereal texture which the visitor can touch and manipulate. The realization that the selection of images is dependent on the brain waves of the visitor promotes a feeling of clairvoyance and intimacy, facilitating a window into the subconscious.

INTERACTIVE FLUID SIMULATION

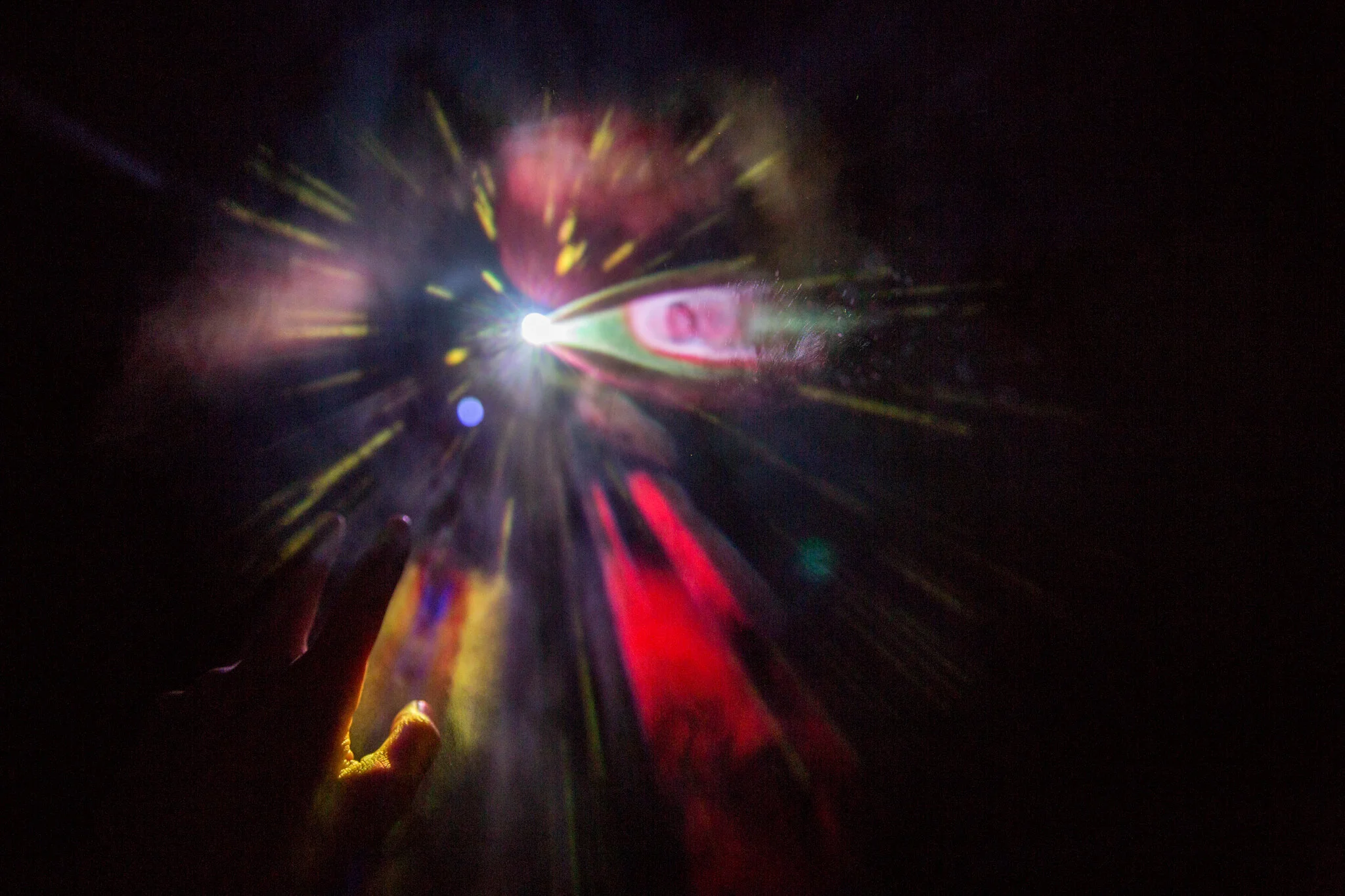

The objective of this demo was to project an interactive code-generated fluid simulation on actual fluid. This was achieved by creating a water vapour screen, encapsulated in glass, and back-projecting the real-time fluid visualization onto the surface.

When a viewer stands in front of the projection, they are tracked using a webcam and PoseNet, a machine-learning model that facilitates skeleton tracking. The data obtained from PoseNet is used to influence the fluid simulation, which is generated in real time using p5.js. This allows the viewer to manipulate the projected fluid simulation with their movement.
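One way tracked body points could drive a fluid simulation is sketched below in Python rather than p5.js. It is only an illustration: the keypoint shape (`x`, `y`, `score`), the confidence threshold, and the grid-based force format are assumptions, not the code behind this demo.

```python
from typing import Dict, Optional, Tuple

# Assumed keypoint shape: pixel coordinates plus a 0..1 confidence score.
Keypoint = Dict[str, float]


def keypoint_to_force(
    prev: Keypoint,
    curr: Keypoint,
    frame_size: Tuple[int, int],
    grid_size: Tuple[int, int],
    min_score: float = 0.5,
) -> Optional[Tuple[int, int, float, float]]:
    """Turn one tracked point's motion into (col, row, dx, dy) for a fluid grid.

    Returns None when either detection is below the confidence threshold.
    """
    if prev["score"] < min_score or curr["score"] < min_score:
        return None
    width, height = frame_size
    cols, rows = grid_size
    # Locate the grid cell under the current position, clamped to the grid.
    col = min(max(int(curr["x"] / width * cols), 0), cols - 1)
    row = min(max(int(curr["y"] / height * rows), 0), rows - 1)
    # Frame-to-frame displacement acts as the force pushed into that cell.
    dx = curr["x"] - prev["x"]
    dy = curr["y"] - prev["y"]
    return (col, row, dx, dy)


force = keypoint_to_force(
    {"x": 100.0, "y": 100.0, "score": 0.9},
    {"x": 320.0, "y": 240.0, "score": 0.9},
    frame_size=(640, 480),
    grid_size=(64, 48),
)
```

Skipping low-confidence detections keeps jittery tracking from injecting sudden forces into the simulation.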

LOTUS FLOWER IN AUGMENTED REALITY

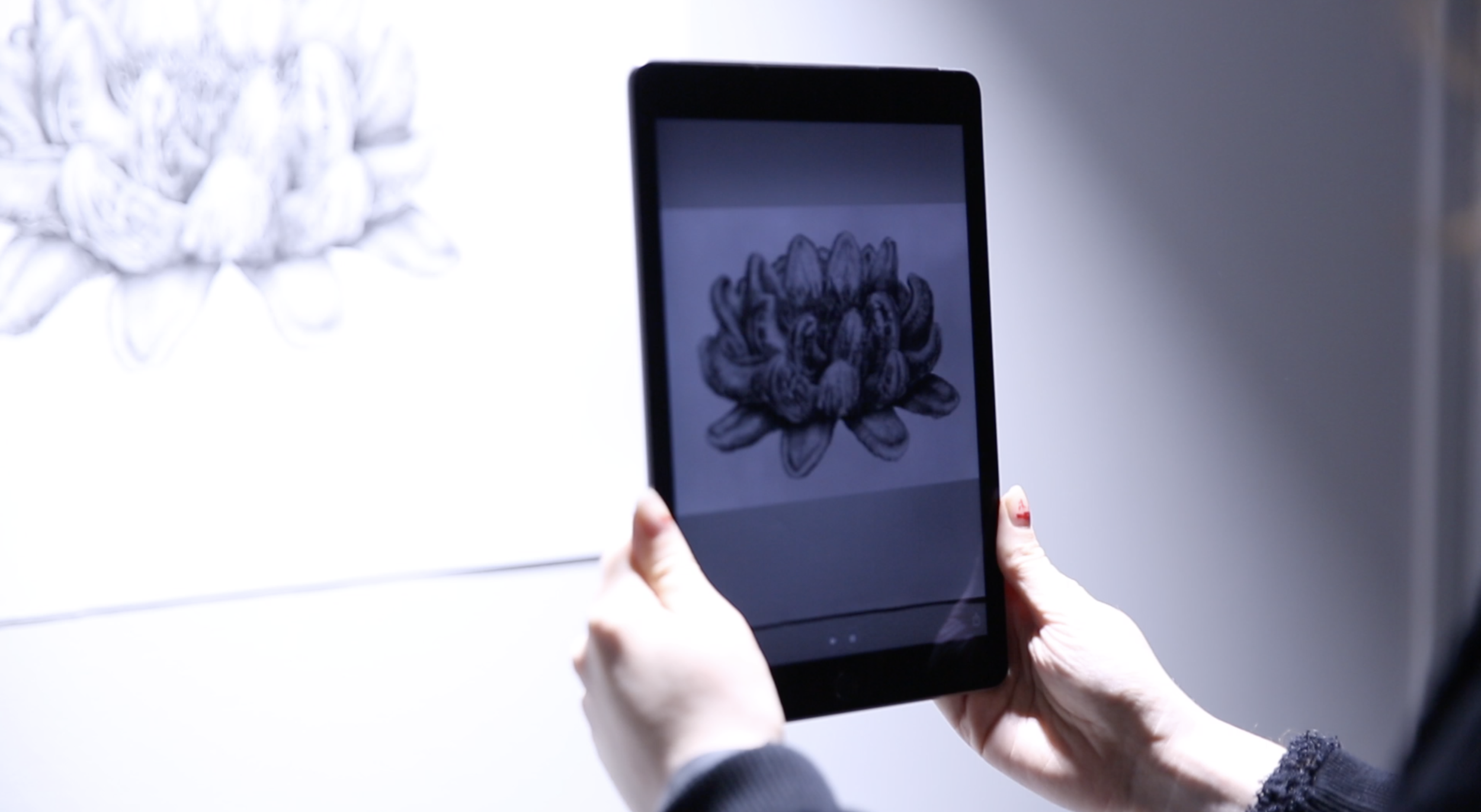

This project, titled “Tongue and Lotus Morph,” was created for the Occupy Earth exhibition, held in Vare Gallery at Aalto University. This exhibition was the product of a collaborative course with Parsons University, which explored various topics of the Anthropocene, climate disruption and our complex relationship with non-human entities.

The piece features a graphite illustration of tongues arranged in the shape of a lotus flower, with stamens protruding from the center. This illustration acts as a trigger for an animation which can be viewed through an augmented reality application on an iPad. When visitors position the iPad in front of the illustration, they witness the image come to life, as the tongues slowly coil and unfurl. This animation mimics the blossoming of the flower, as well as the natural motion of a human tongue.

"It is like becoming accustomed to something strange, yet it is also becoming accustomed to strangeness that doesn’t become less strange through acclimation." -Thomas Morton, "Dark Ecology"

VOIDBRINGER AUGMENTED REALITY

In the early 1900s, professors at the University of Art and Design Helsinki would occasionally travel out of the country to acquire unique and exotic textbooks for their classes. The Dekorativ Vorbilder is one such text. Printed in 1894, the illustration and ornamental design book was published in Berlin by one of the oldest family-run publishers of architecture, art, and design books in Germany. The book includes dozens of pages of hand-drawn illustrations, ranging widely in content and style.

Due to conservation practices, this book is stored in the basement of the Aalto Archives and is not easily accessible to students. Driven by the desire to illuminate the intricate illustrations, I have created an Augmented Reality animation that facilitates the rediscovery of the pages of the Dekorativ Vorbilder.

A page from the Dekorativ Vorbilder can be seen on display at the Aalto University Archives. Adjacent to the page is an iPad, which visitors are prompted to pick up and use to study the page through the front camera lens on the device. The page acts as a trigger for an augmented reality animation. The video showcases multiple pages from the Dekorativ Vorbilder, cinematically animated to reflect the mediums and intricate content found in the original artifact. The pages of the once-hidden book are dynamically reinterpreted to engage a new generation of students and visitors.

ELECTRONIC CREATURE

This motorized creature was fabricated for a course titled “Programming for Sculptors,” which taught the basics of using an Arduino microcontroller to build kinetic sculptures. For my demo I created a shaggy creature using an Arduino, a servo motor, and an upcycled rug.

First, I constructed an internal skeleton from small wooden skewers. Second, I cut and attached pieces of the rug onto the various layers of the skeleton. Third, I programmed the motor to move the skeleton at various speeds and increments, resulting in a natural and fluid-like motion that ripples throughout the body of the sculpture.
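The idea of moving a servo at various speeds and increments can be sketched as a schedule of target angles and pauses. The Python below is a language-neutral illustration, not the Arduino program that drives the creature; the function names, phase values, and delay times are hypothetical.

```python
from typing import List, Tuple


def sweep(start: int, end: int, step: int) -> List[int]:
    """Angles from start to end inclusive, moving `step` degrees at a time."""
    if step <= 0:
        raise ValueError("step must be positive")
    direction = 1 if end >= start else -1
    angles = list(range(start, end, direction * step))
    angles.append(end)  # always finish exactly on the target angle
    return angles


def ripple_schedule(
    phases: List[Tuple[int, int, int, int]],
) -> List[Tuple[int, int]]:
    """Expand (start, end, step, delay_ms) phases into (angle, delay_ms) pairs."""
    schedule = []
    for start, end, step, delay_ms in phases:
        for angle in sweep(start, end, step):
            schedule.append((angle, delay_ms))
    return schedule


# Hypothetical motion: a quick, coarse rise followed by a slow, fine fall.
schedule = ripple_schedule([(0, 60, 30, 20), (60, 0, 20, 50)])
```

On a microcontroller, each pair would correspond to writing the angle to the servo and then waiting for the given delay; smaller steps with longer delays read as slower, smoother motion.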

INTERACTIVE GALAXY

This demo is the result of my first experiments with Kinect and Processing. For this project, I hung a large ream of paper from multiple trees situated in a seaside forest on the Otaniemi Campus. This paper acted as a long overhead screen. Underneath the screen, I positioned two projectors and a Kinect, which was used to track the position of viewers.

When a viewer is underneath the projection they can see their position reflected as a small star in space in the overhead projection. The real-time visualization is created in Processing, which obtains the position of the viewer from the Kinect and utilizes that data to create a kinetic network of stars. As the participant moves, the star follows their movement and connects to other stars in the space, triggering atmospheric bell sounds.
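The connection logic can be illustrated with a short Python sketch. It assumes that the viewer's star links to any star within a fixed radius and that a sound is triggered only when a link is newly formed; both rules, and all names and coordinates, are assumptions rather than the Processing code used in the demo.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def connections(viewer: Point, stars: List[Point], radius: float) -> List[int]:
    """Indices of the stars that lie within `radius` of the viewer's star."""
    return [i for i, star in enumerate(stars) if math.dist(viewer, star) <= radius]


def new_connections(previous: List[int], current: List[int]) -> List[int]:
    """Connections present now that were absent in the previous frame."""
    seen = set(previous)
    return [i for i in current if i not in seen]


# Hypothetical star field and two consecutive viewer positions.
stars = [(0.0, 0.0), (3.0, 4.0), (10.0, 10.0)]
before = connections((-4.0, 0.0), stars, radius=5.0)
after = connections((0.0, 0.0), stars, radius=5.0)
to_chime = new_connections(before, after)  # one bell sound per new link
```

Comparing consecutive frames keeps a sound from retriggering on every frame while a viewer stands still near a star.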

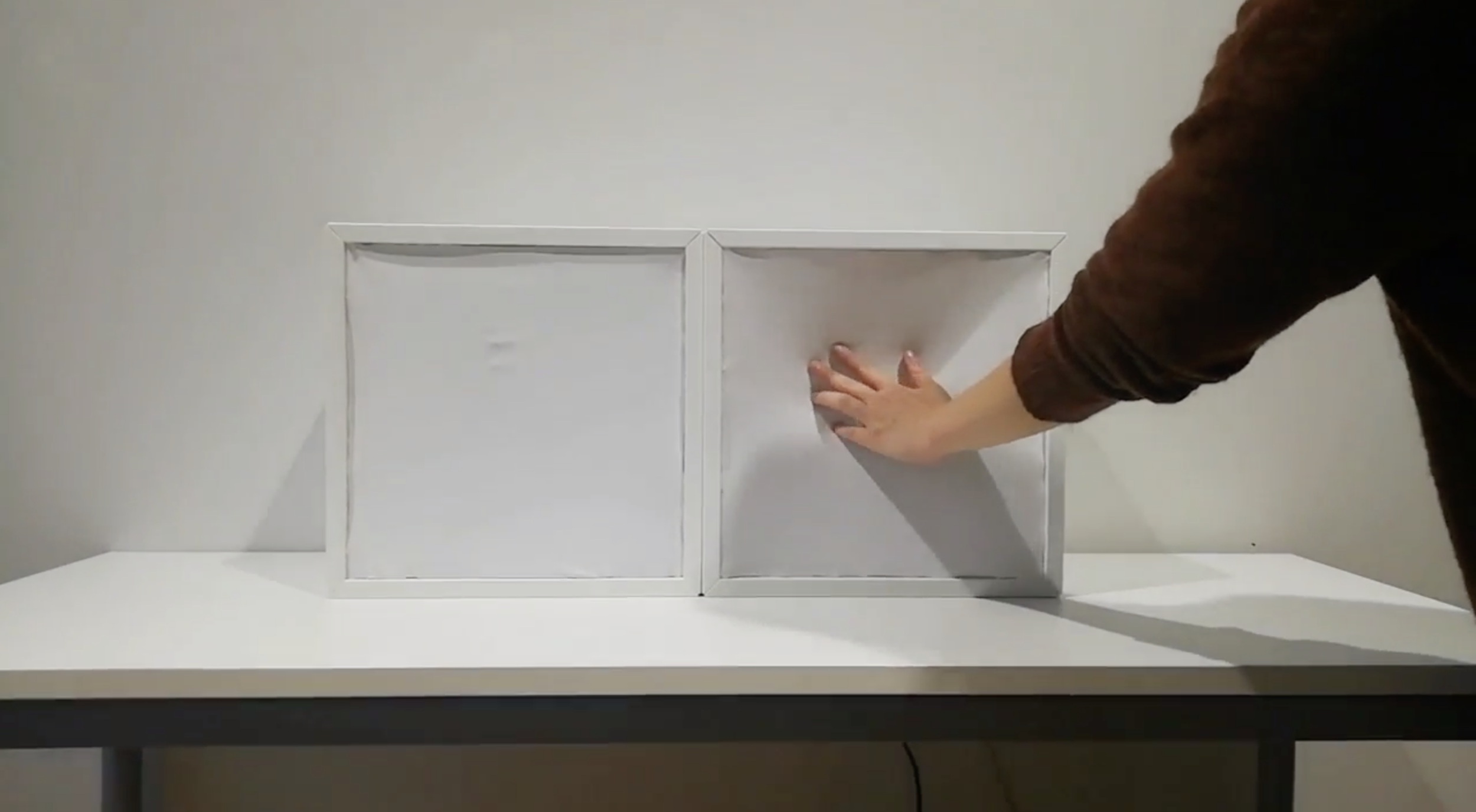

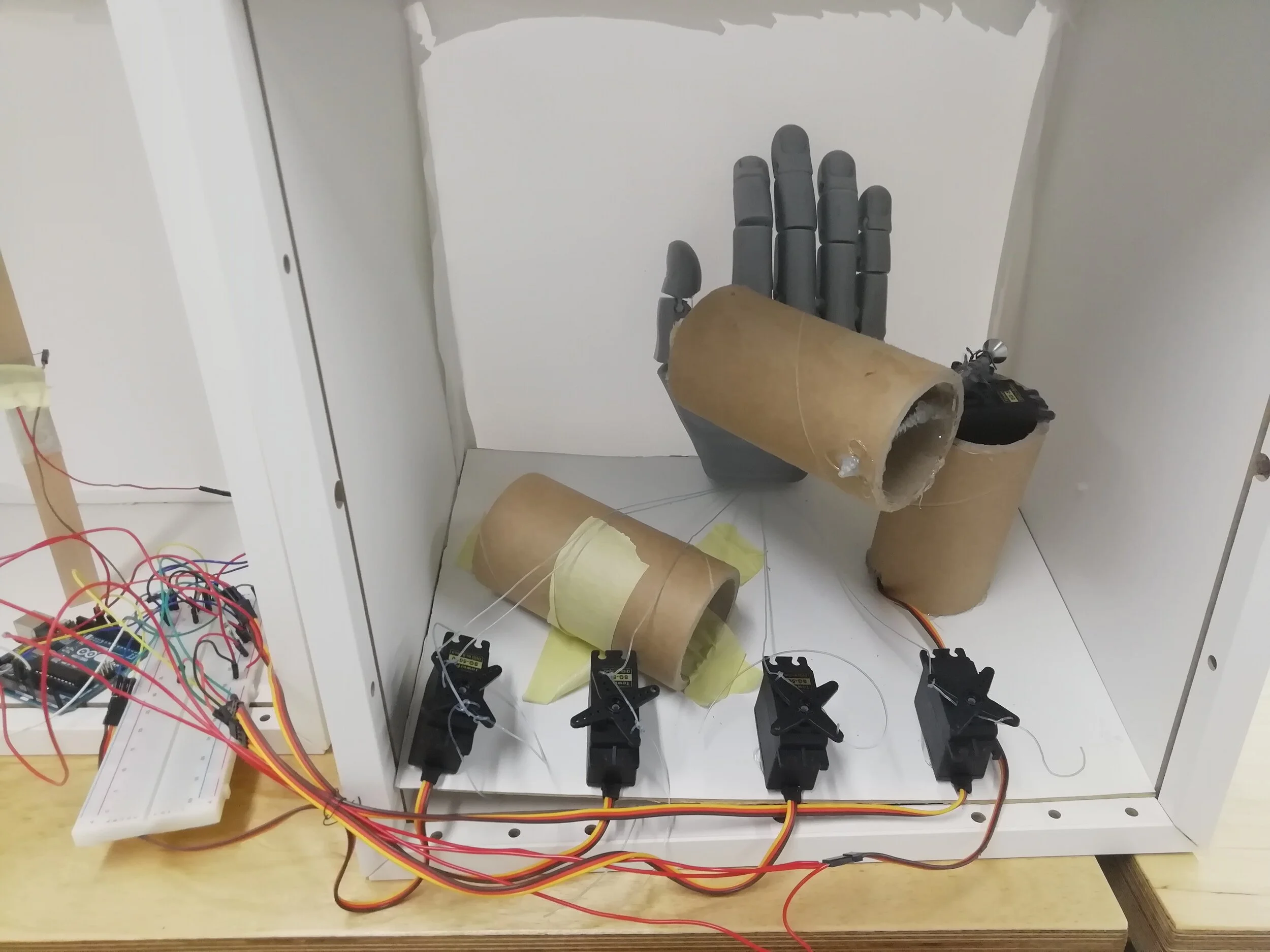

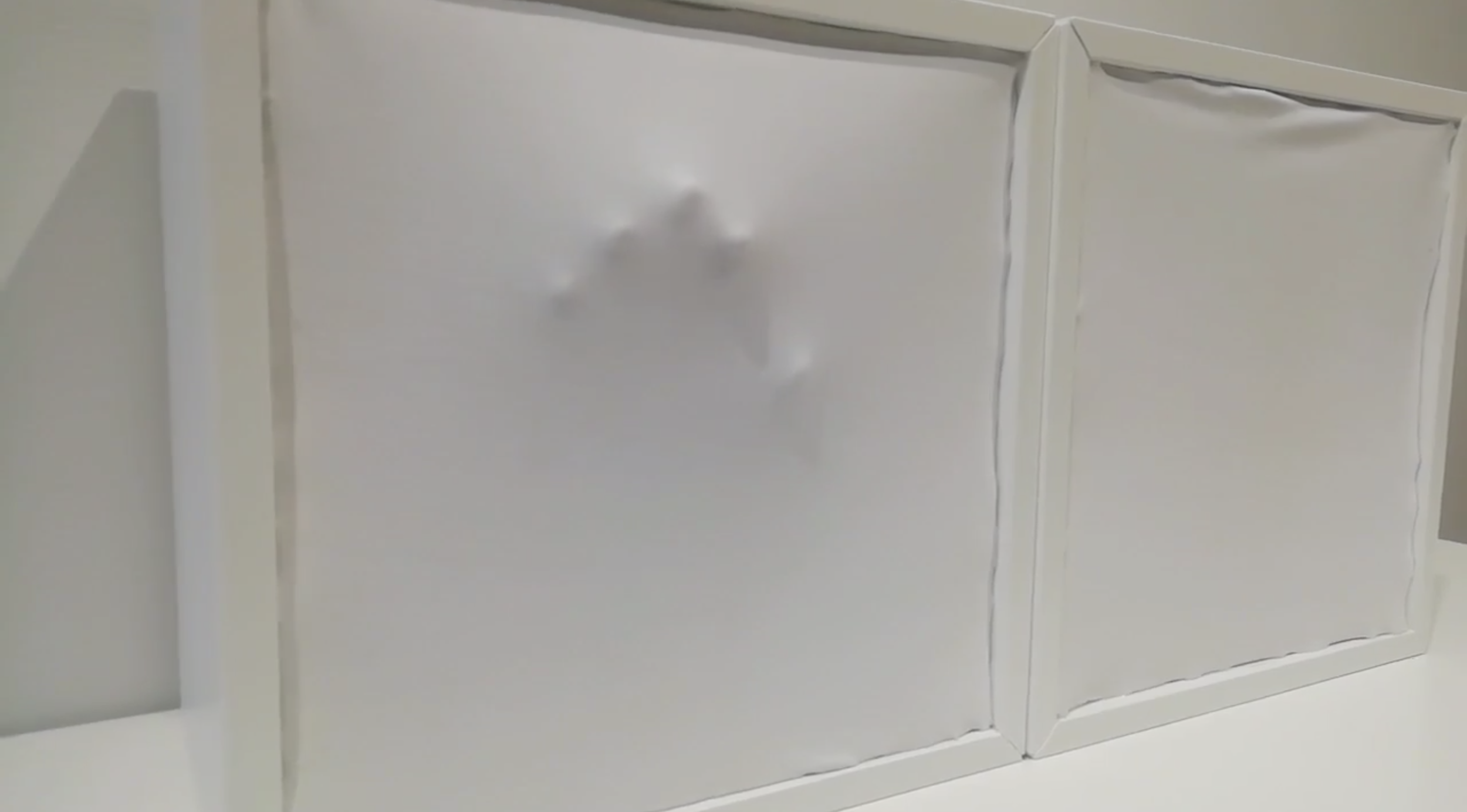

ELECTRONIC HAND

This demo features a 3D printed hand, attached to four servo motors and a distance sensor, positioned inside two white boxes with spandex screens. When a participant reaches their hand to touch the right screen, the distance sensor triggers the motors to enable the robotic hand to touch back on the left screen. The motors are attached to each finger with clear fishing line. When the line is pulled by the motors, it causes each finger to move.
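The trigger behavior can be sketched as a simple threshold on the sensor reading. The Python below is an illustration only: the trigger distance, the servo angles, and the choice to treat a zero reading as invalid are assumptions, not values taken from the build.

```python
from typing import List


def finger_angles(
    distance_cm: float,
    trigger_cm: float = 10.0,  # assumed trigger distance
    rest: int = 0,             # assumed servo angle with the line slack
    pulled: int = 180,         # assumed servo angle with the line pulled
    motors: int = 4,           # the demo uses four servo motors
) -> List[int]:
    """Return one target angle per servo for a single distance reading."""
    # A reading of zero or below is treated as an invalid measurement.
    reaching = 0 < distance_cm <= trigger_cm
    return [pulled if reaching else rest] * motors


near = finger_angles(5.0)   # a hand close to the right screen
far = finger_angles(50.0)   # nobody within range
```

Each returned angle would be written to one servo, which pulls or releases the fishing line attached to its finger.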

“The first language humans had was gestures. There was nothing primitive about this language that flowed from people’s hands, nothing we say now that could not be said in the endless array of movements possible with the fine bones of the fingers and wrists.” -Nicole Krauss, The History of Love

FACE TRACKING XY PLOTTER

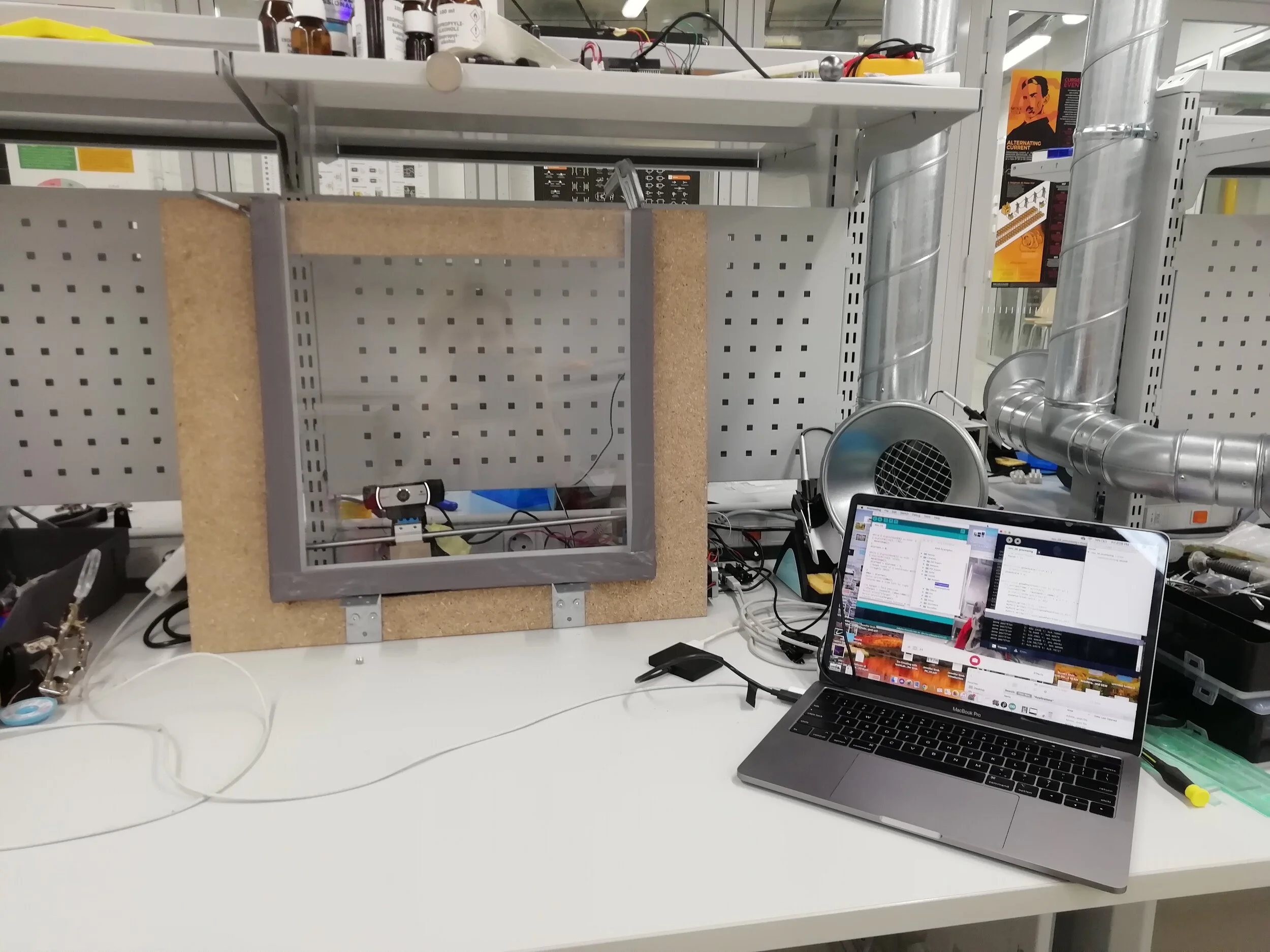

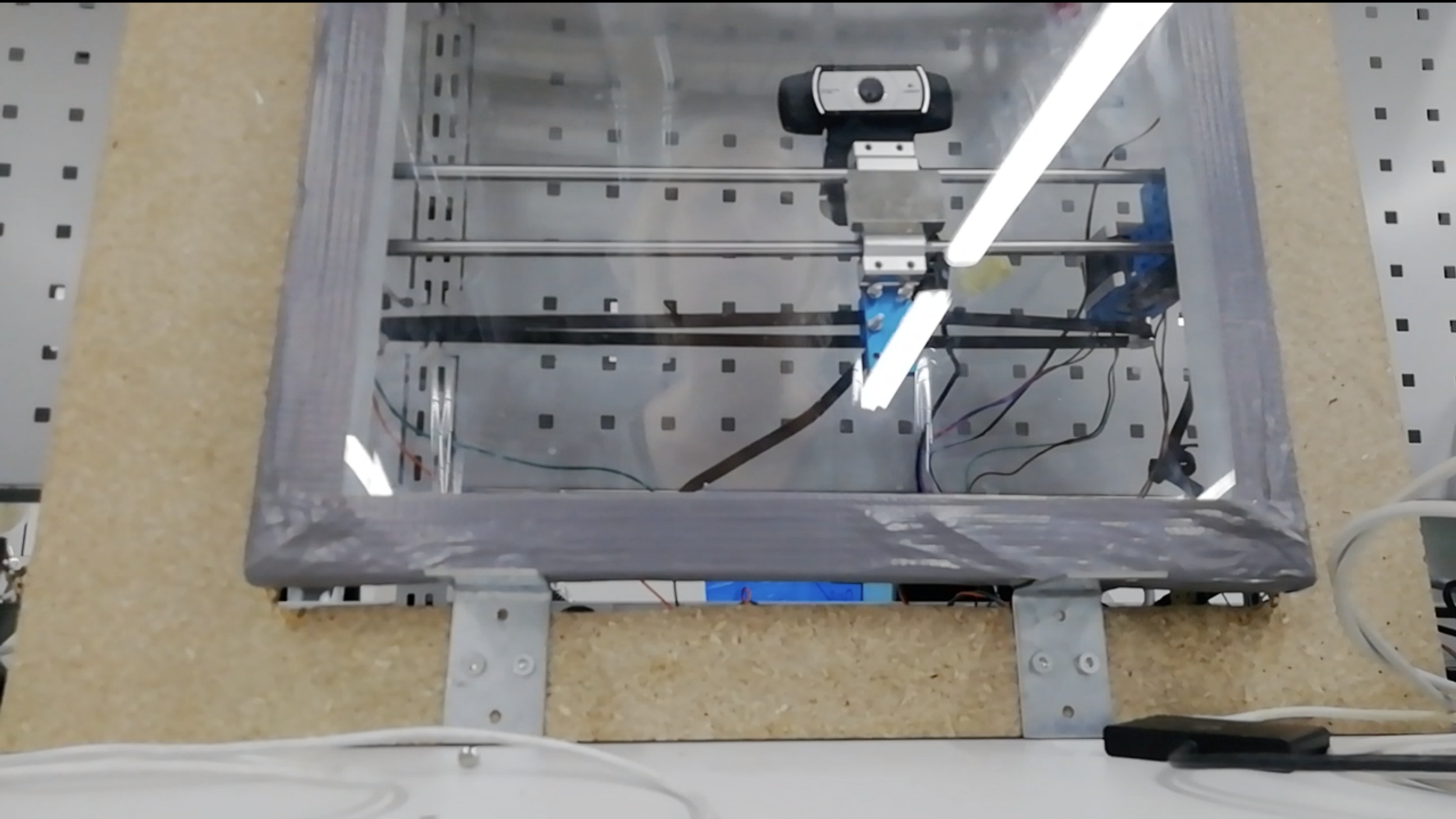

This project utilizes a self-constructed X-Y plotter and a webcam to track the face of the viewer as they move in front of the display. The face tracking data is provided by an application called FaceOSC, which communicates with an Arduino to move the X-Y plotter in coordination with the viewer’s face.
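Mapping a tracked face position to plotter coordinates can be sketched as follows. This Python is a minimal illustration under stated assumptions: the camera resolution, the plotter travel in motor steps, and the mirrored X axis are hypothetical and not taken from the actual setup.

```python
from typing import Tuple


def face_to_steps(
    face_x: float,
    face_y: float,
    frame_size: Tuple[int, int],
    travel_steps: Tuple[int, int],
    mirror_x: bool = True,
) -> Tuple[int, int]:
    """Map a face position in camera pixels to plotter steps on each axis."""
    width, height = frame_size
    max_x, max_y = travel_steps
    # Normalize to 0..1 and clamp so the carriage stays inside its travel.
    nx = min(max(face_x / width, 0.0), 1.0)
    ny = min(max(face_y / height, 0.0), 1.0)
    if mirror_x:
        # Assumption: the camera faces the viewer, so X is flipped
        # to make the carriage follow them like a mirror image.
        nx = 1.0 - nx
    return (round(nx * max_x), round(ny * max_y))


target = face_to_steps(160, 240, frame_size=(640, 480), travel_steps=(2000, 1000))
```

Clamping the normalized position protects the plotter from being driven past its limits when the tracker briefly reports a face outside the frame.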

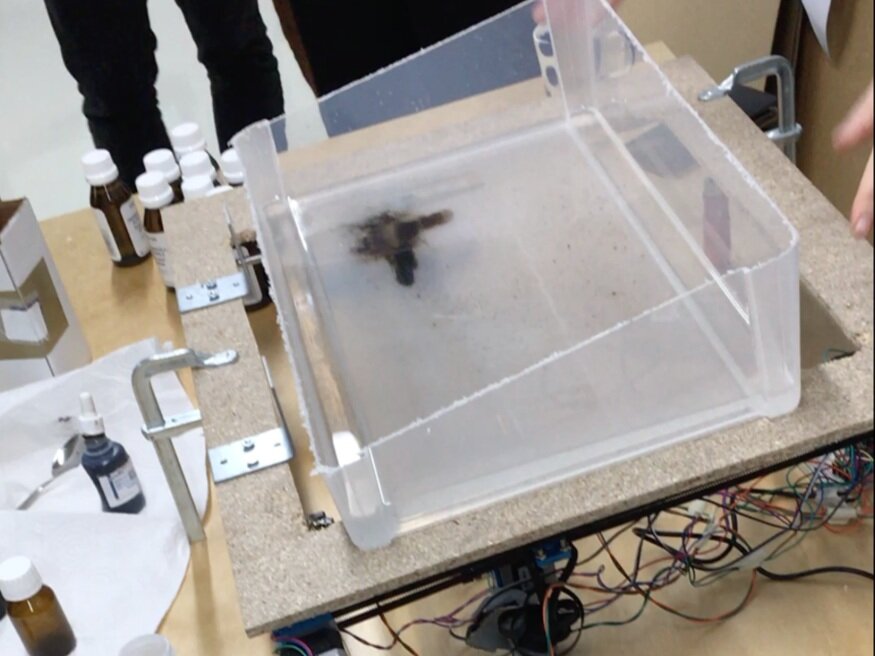

Another demo, as pictured, shows a magnet attached to the X-Y plotter, which enables the plotter to move a magnetised liquid known as ferrofluid. The ferrofluid is suspended above the plotter in a plastic container filled with isopropyl alcohol, sugar, soap, and water. The result is a magnetised kinetic liquid that reflects the motion of the viewer.